“How are we showing up in AI?”

If your business relies on content and SEO for marketing, you’ve probably been asking (or getting asked) this question a lot. And if you’re going to answer this question, you need an AI visibility tool.

There are a lot of these tools out there on the market. Some are stronger than others, and some just have bigger marketing budgets behind them.

So Overthink Group General Manager Zoe Tjoelker and I tested seven of the leading AI visibility tools in this space to find the pros and cons of each.

We’ll cover:

- The best AI visibility tools, ranked

- An overview of how AI visibility tools work

- Our ranking methodology

- Key capabilities to look for in AI visibility tools

- The pros and cons of all 7 AI visibility tools

- Bottom line: be strategic with sample prompts

The best tools for AI and LLM visibility, ranked by people who used them

We tested seven of the leading tools in this category, rating them on a weighted scale of 0–5 based on their ability to perform 20 key marketing tasks. Here are the results up front.

The Best AI Visibility Tools (Dec. 2025)

| Rank | AI visibility tool | Score | Notes |
| --- | --- | --- | --- |
| 1 | Profound | 3.6 | Best at parameter definition, strong segmentation architecture, and in-tool URL tracking |
| 2 | Scrunch | 3.4 | Best at segmentation architecture, strong parameter definition, intuitive filters and labelling |
| 3 | Peec | 3.2 | Unlimited seats and countries at lowest pay tiers, decent parameter definition |
| 4 | Rankscale | 2.9 | Best at sentiment analysis, decent parameter definition |
| 5 | Otterly | 2.8 | Strong parameter definition |
| 6 | Semrush AIO | 2.2 | Strong vision, weak execution |
| 7 | Ahrefs Brand Radar | 1.1 | Best vision, spurious methodology |

We used all these tools on a project exploring a small enterprise B2B SaaS company’s AI visibility (see the methodology here).

Remember: the space is rapidly changing, and these tools are adding new capabilities all the time. (Heck, Semrush announced their acquisition by Adobe as I was writing this report.)

We haven’t been paid by any of these companies, and we’re not affiliated with any of them beyond our use of these tools.

You know who does pay us to do stuff like this? Our clients. We create scoring rubrics like these for our clients to help them show up in both LLMs and traditional search. If you’d like to make comparison-stage product category content like this, we’d love to help.

AI visibility tools: what they are and how they work

AI visibility tools (a.k.a. AEO tools, a.k.a. LLM visibility tools, a.k.a. GEO tools … you get it) promise users the ability to discover, track, and improve the ways their brands show up in AI search channels. The idea is that if you can better understand how your brand is showing up in ChatGPT, Perplexity, Gemini, Google AI Overviews, and the like, you’ll be able to influence these channels to mention your product and cite your content more often.

If these tools’ websites are to be believed, improving AI visibility is a key way to discover and reach new audiences.

But that’s all just good copywriting. The end benefit of using these tools may be to get more customers and reach more people. But just as we know using Ahrefs won’t guarantee a spike in organic web traffic, we can’t expect a spike in brand mentions on Perplexity just because we have a Profound account.

So the key question is, “How do AEO tools help us do better AEO?”

To answer that, let’s take a brief look at what it even means to measure AI visibility.

Absolute AI visibility is impossible to know

Let’s say you want to know exactly how your brand shows up on these AI platforms. To learn this, you would need to do the following:

1. Access every single response to every single prompt made by every single user on every single platform.

2. Filter prompts down to the ones that are relevant to you.

3. Filter responses down to the ones that mention your offerings and/or cite your content.

4. Organize that data, labeling entities mentioned, citations, sentiment, themes, etc.

5. Analyze the dataset for insights.

6. Repeat steps 1–5 over and over again to gauge consistency and spot trends.

Step 1 is a non-starter: you don’t have access to that kind of data, and right now nobody will sell it to you.

But even if all that data were available to you, it would be prohibitively, unjustifiably expensive.

Approximate AI visibility is more manageable to obtain

Since you can’t know your exact AI visibility, you settle for learning how your brand shows up in responses to the kinds of relevant prompts people would ostensibly plug into these platforms. Now your task list looks like this:

1. Create a set of sample prompts you would want to elicit AI responses that mention your brand or product and/or cite your content.

2. Submit those sample prompts to the AI channels your audience uses and capture the responses.

3. Organize that data, labeling entities mentioned, citations, sentiment, themes, etc.

4. Analyze the dataset for insights.

5. Repeat steps 2–4 over and over again to gauge consistency and spot trends.

Now we’re working in the realm of possibility—but that’s a lot of tedious manual work.

This is where AEO tools come in handy.

AEO tools are little golem go-betweens

AEO tools automate the process of entering prompts, scraping responses, and presenting that data back to you so that you can find insights.

They’re like little golems, tirelessly feeding prompts into AI search platforms and bringing responses back to you for your review. The perfect AI visibility tool will flawlessly and consistently execute every mundane, repetitive task in this process, leaving only the parts requiring common sense and expertise to you.

Unfortunately, we don’t have the perfect AEO golem yet.

However, some of these golems are better at handling repetitive tasks than others. The real differentiation among AEO tools is in how well they can do two things:

- Give you a reliable approximation of your brand’s AI visibility for a defined sample of prompts

- Save you time by making prompt/response data easier for you to interrogate for insights

The top five AI visibility tools do a decent job at the first. (Semrush doesn’t give you much control when it comes to defining your reports’ sample parameters; Ahrefs requires a lot of trial and error querying to try to get there, and there’s no guarantee you will.)

But when it comes to saving you time and helping you get to the insights, different tools deliver (or fail) in different ways.

There are many ways an AI visibility tool can help you save time:

- Giving you out-of-the-box metrics and dashboards

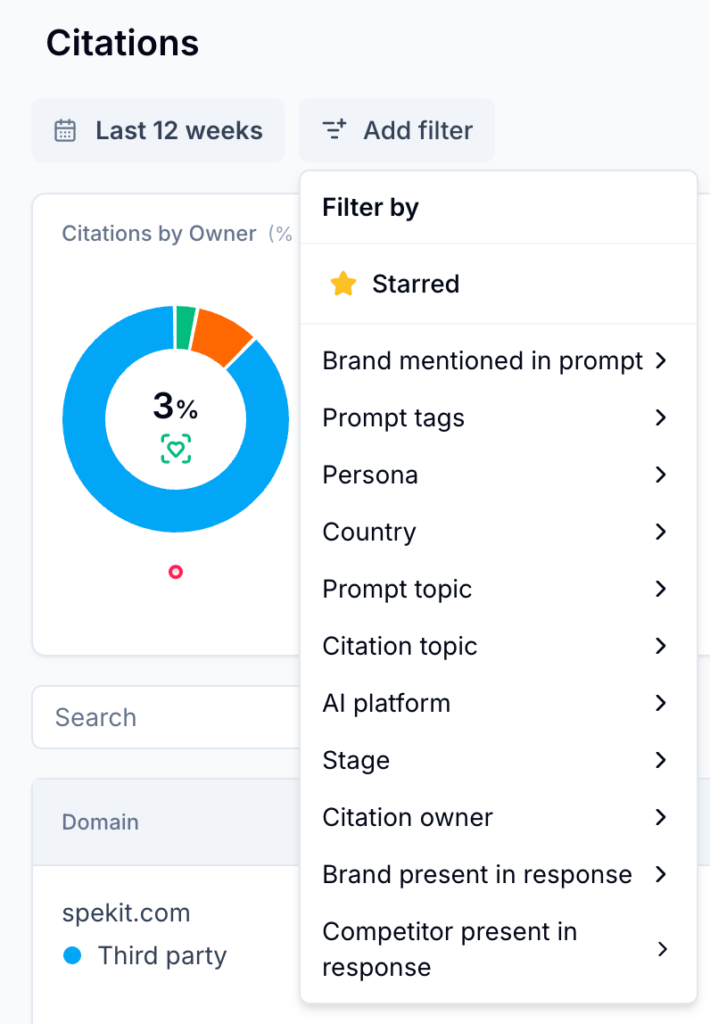

- Letting you build a tagging architecture for cross-cutting visibility data

- Giving you drill-down analytics that get you to the specific information you’re looking for

- Consistently labelling your data so you don’t have to manually clean it up

Now that we’ve covered what these tools are and how they work, let’s take a look at what kind of value you should expect to get if you use one of these AI visibility tools.

Do AI visibility tools actually deliver value?

You get out what you put in, folks:

- An AI visibility tool can show you how LLMs treat your brand and products—but only if you feed it prompts that people would reasonably use.

- An AI visibility tool can give you an idea of how your content is cited in AI responses—but you’re either getting a narrow slice of that information or manually filtering out a lot of noise.

- An AI visibility tool can give you the data you need to compare your approximate visibility to competitors—but only if you build both a sample prompt set and a reporting workflow that accounts for your own bias.

- An AI visibility tool can give you some zero-click marketing reporting metrics—but turning those metrics into anything meaningful is on you.

Thoughtful and thorough marketing teams can use these tools to gather meaningful insights into how their brands and products are showing up in AI. But getting real value out of these will take a concerted effort in the setup, monitoring, and reporting methodologies.

However, there is one way that these tools can conceivably deliver immediate value: you can use any one of these tools to get management off your back about AI search … for now.

(That may sound like a joke. It’s not.)

There’s a lot of anxiety around GEO. Deferring that anxiety gives your organization a chance to focus on activities that actually return results. These tools will give you metrics and charts that you can immediately use to answer managers when they ask, “How are we doing in AI search?” For many managers, simply knowing that you’re doing something is enough for now.

But don’t expect to lean on this for too long.

Cautions and caveats for teams using AI visibility tools

By the time 2026 is over, many marketing teams will have to use AI visibility tools and/or GEO agencies. If you’re reading an article like this, I assume you’re one of them.

So let’s address a handful of things you should be cautious about when you, your team, or your agency starts using these tools.

1. The books are cooked from the get-go

No matter how you slice it, until ChatGPT and other AI platforms start sharing general prompt and response data, any AI visibility tool’s reports will come with an inherent bias. For some (i.e., Brand Radar and Semrush AIO) that bias comes from the decisions the tools make for you, but for most (i.e., the top five tools in this report), the bias will come from the sample prompt set you choose.

It seems easy to plug prompts into one of these AI visibility tools. “What are the best [my product category] tools?” “What are the pros and cons of [my brand]?”

In reality, it’s extremely easy to get your AI visibility tool to give you meaningless reports, especially if you just accept all the AI-generated prompts they suggest you use on setup.

Remember, these tools are little golems. They don’t really know anything about you, your market, or your marketing strategy. They’re just feeding your prompts to AI platforms and bringing back the responses.

The prompts you choose directly influence the responses you get.

A sample prompt set that includes more total mentions of your brand than any other competitor will return more mentions of your brand than any other competitor, and that will inflate your share of voice metrics and obscure true competitive opportunities.
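To make that inflation effect concrete, here is a minimal Python sketch. It assumes share of voice is simply each brand's share of all brand mentions across captured responses, which may not match how any particular tool calculates it. The competitor names (`AcmeEnable`, `BetaDeck`) and the response texts are made up for illustration.

```python
from collections import Counter

# Hypothetical tracked entities: Highspot plus two invented competitors.
BRANDS = ["Highspot", "AcmeEnable", "BetaDeck"]

def share_of_voice(responses, brands=BRANDS):
    """Return each brand's share of all brand mentions across the responses.

    A response counts once per brand it mentions (case-insensitive substring match).
    """
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    return {b: (counts[b] / total if total else 0.0) for b in brands}

# Invented responses to unbranded prompts.
unbranded = [
    "Popular options include AcmeEnable, Highspot, and BetaDeck.",
    "AcmeEnable and BetaDeck are often recommended.",
    "Many teams choose AcmeEnable.",
]
# Invented responses to prompts that name only Highspot.
branded = [
    "Thought leaders say Highspot is easy to adopt.",
    "Highspot is praised for its analytics.",
]

baseline = share_of_voice(unbranded)
inflated = share_of_voice(unbranded + branded)
print(f"Highspot share of voice, unbranded only: {baseline['Highspot']:.1%}")
print(f"Highspot share of voice, with branded prompts added: {inflated['Highspot']:.1%}")
```

In this toy data, adding two prompts that name only the target brand more than doubles its share of voice, even though nothing changed about how it shows up in unbranded responses.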

As of now, there’s no reliable way to get an AI visibility report that is both immediately helpful and unbiased. (Ahrefs is working on it, but they’re not there yet.) To recommend taking AI visibility reports with a grain of salt would be an understatement.

2. Expect difficulties in measuring progress

You’re likely going to change your sample prompt set over time. Unless the tool you’re using has strong segmentation capabilities, and you use them intentionally to account for this effect, this will skew your month-over-month numbers.

You can mitigate this problem with a strategic tagging architecture and strong reporting methodology. But in order to fully sidestep this problem, you’ll have to build those systems in a way that accurately anticipates the ways you’ll change your sample prompt set in the future.

The future is notoriously difficult to predict, so expect your first year on AI visibility tools to be rather squishy on reporting as you find your groove. And plan to provide clear, robust explanations for every report to guide readers through a field of misinterpretation landmines.
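One way to keep month-over-month numbers comparable while the prompt set changes is to report on a stable cohort: only the prompts that were tracked in both periods. The sketch below is a hypothetical illustration in Python, not a feature of any tool in this report. The prompts, results, and the `mention_rate` helper are all invented.

```python
def mention_rate(results, prompts=None):
    """Fraction of prompts whose response mentioned the brand.

    `results` maps prompt text to True/False. If `prompts` is given,
    only those prompts are counted.
    """
    keys = list(results.keys() if prompts is None else prompts)
    if not keys:
        return 0.0
    return sum(results[p] for p in keys) / len(keys)

# Invented tracking results: prompt -> was the brand mentioned?
november = {
    "best sales enablement tools": False,
    "alternatives to AcmeEnable": True,
}
december = {
    "best sales enablement tools": False,
    "alternatives to AcmeEnable": True,
    "pros and cons of Highspot": True,   # new branded prompt
    "what is Highspot": True,            # new branded prompt
}

# Prompts tracked in both months form the stable cohort.
stable = sorted(november.keys() & december.keys())

nov_rate = mention_rate(november)
dec_naive = mention_rate(december)
dec_stable = mention_rate(december, stable)

print(f"November: {nov_rate:.0%}")
print(f"December, all prompts: {dec_naive:.0%}")
print(f"December, stable cohort: {dec_stable:.0%}")
```

Here the naive December number jumps only because two branded prompts were added. On the stable cohort, nothing moved.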

3. AI visibility tools are extremely susceptible to manipulation

These tools make it very easy for users to manipulate reports by tinkering with the sample prompt set. The following examples would all work in our report’s highest-scoring tools:

- You want a higher share of voice score? Just add prompts that elicit mentions of your brand and no one else’s, like, “What do thought leaders say about [my brand]?”

- You want more mentions in responses to unbranded prompts? Add a few prompts along the lines of, “What are some [my product category] companies in [town where my brand is headquartered]?”

- You want better sentiment numbers? Add more prompts like, “What advantages do companies in [target market] get from using [my product]?”

Hopefully, your marketing team or GEO agency won’t do this. But if these are the KPIs you’re looking at, it’s important to know that they can be very easily manipulated.

4. Focus on the AI platforms your audience uses

Most of these tools come with limitations on prompt count, platforms monitored, or both. But you probably don’t need to track your visibility across every single platform.

Instead, focus your energies on the platforms that your audience is most likely to use.

If you don’t know which platform that is, you can look that up using Sparktoro. This tool will tell you which search channels your audience uses, as well as how much more likely they are to use said tools than the average internet user in your region.

For example, when it comes to people in the USA who visit our website, almost all of them use Google. But our audience is 24% more likely to use ChatGPT than the average US internet user—and more than 160% more likely to use Perplexity and Claude! We’re a lot more interested in how we show up in these AI channels than, say, Gemini or Meta AI.

Keep this in mind as you choose an AI visibility tool.

OK. Are you ready to see how we got to those scores I mentioned at the beginning?

Our methodology for comparing AI visibility tools

Our main question as we started this report was, “Which AI visibility tool would we be most likely to use ourselves and recommend to our clients?”

Ideally, a tool like this would provide marketing strategists with information that complements the other tools they already use. These tools should pull their weight when it comes to creating and managing marketing strategies—especially since several of these are more expensive than the tools we already rely on (Ahrefs, Sparktoro, BuzzSumo, etc.).

So we created a hypothetical client project, and scored all seven of these tools on how they contributed to it.

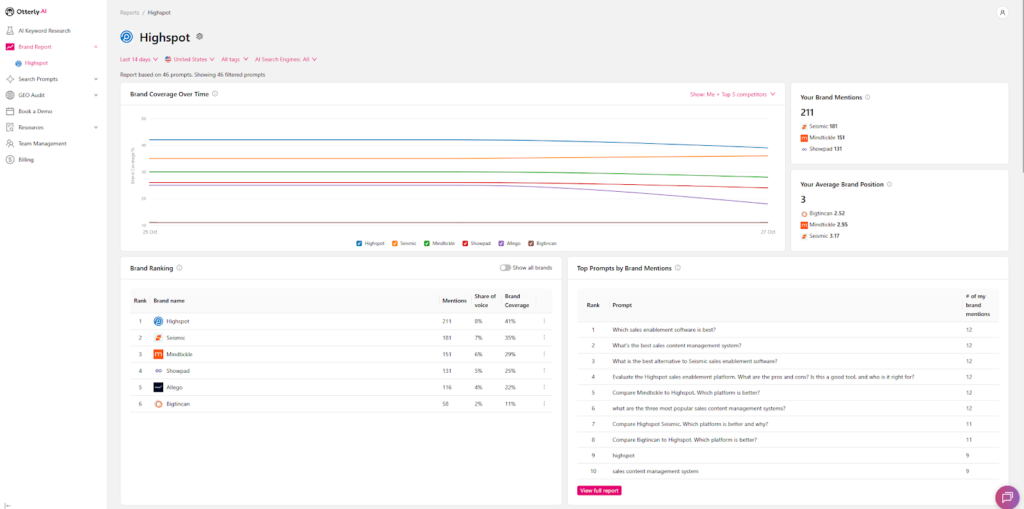

Test project: Highspot’s AI search visibility

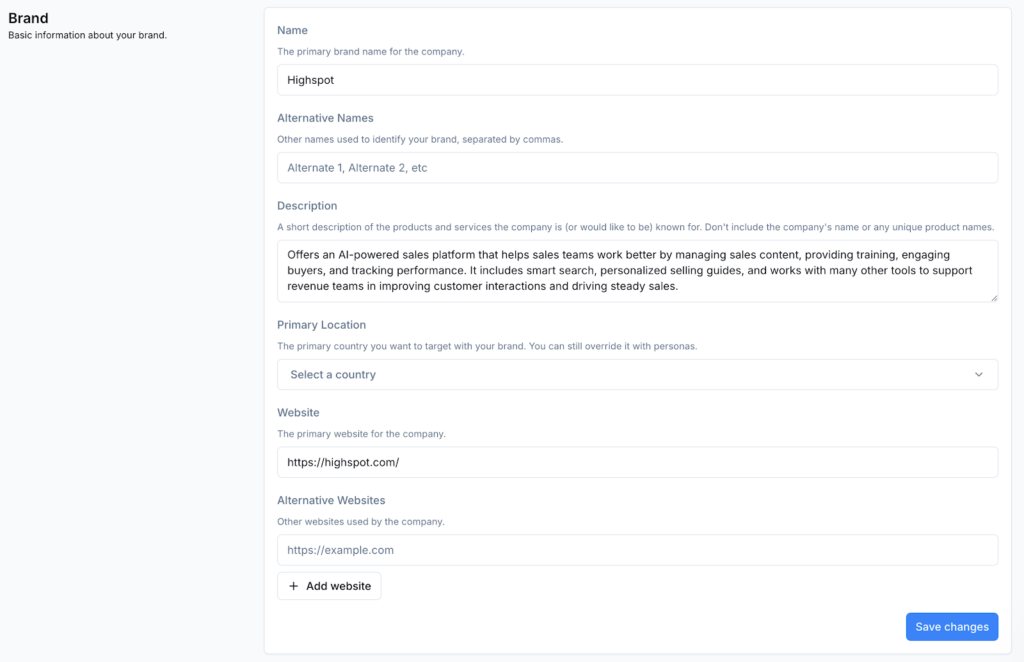

We imagined what we would do if a moderately large B2B SaaS company contracted us to create a content and messaging strategy. So we chose Highspot as our hypothetical client: they’re relatively well-known, they’ve defined their product category well, and they have a very obvious set of direct competitors.

Next, we imagined what kind of information we would want to provide if Highspot asked us the question, “How are we doing in AI search?” This became a brief that we filled out using every tool (or at least as much as we could, given each tool’s capabilities).

The basics of what we attempted to do with all these tools:

- Define a target brand, branded terms, and competitors.

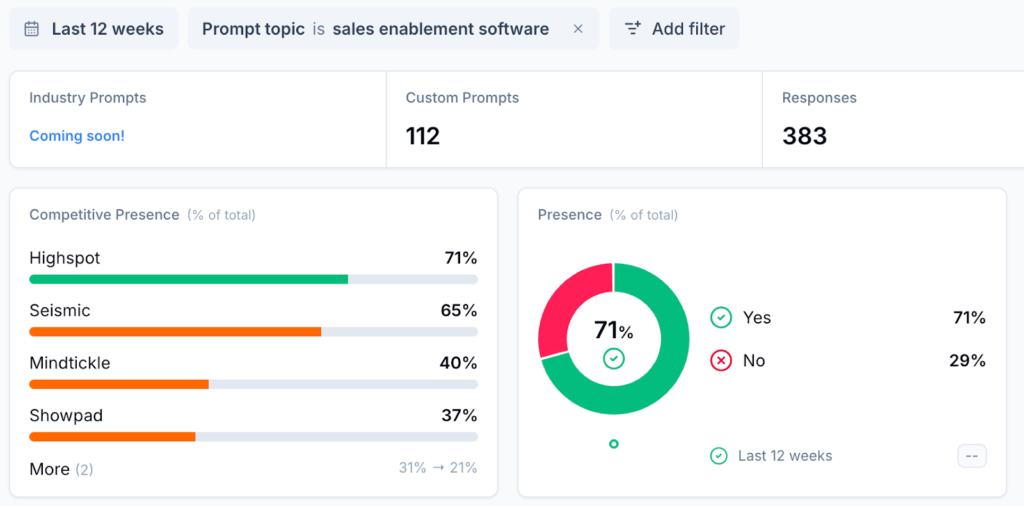

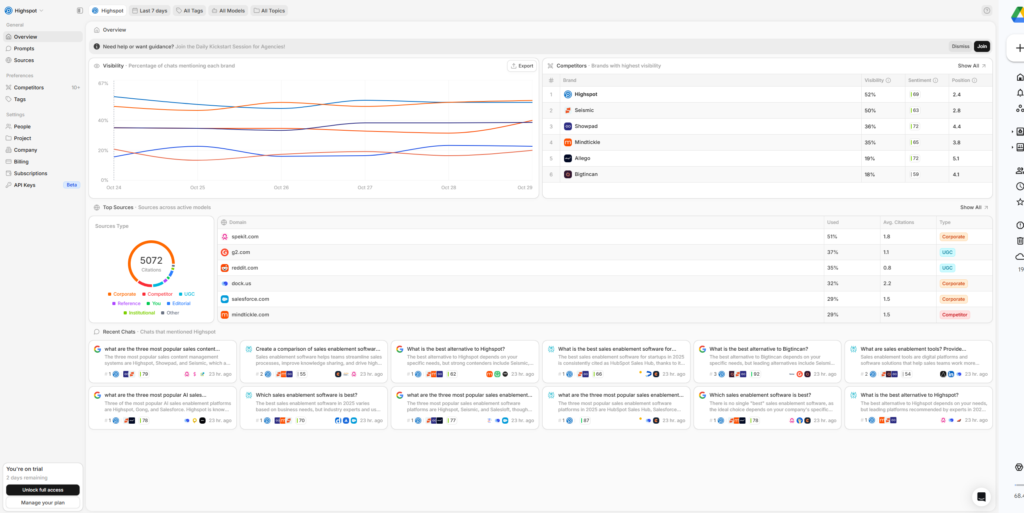

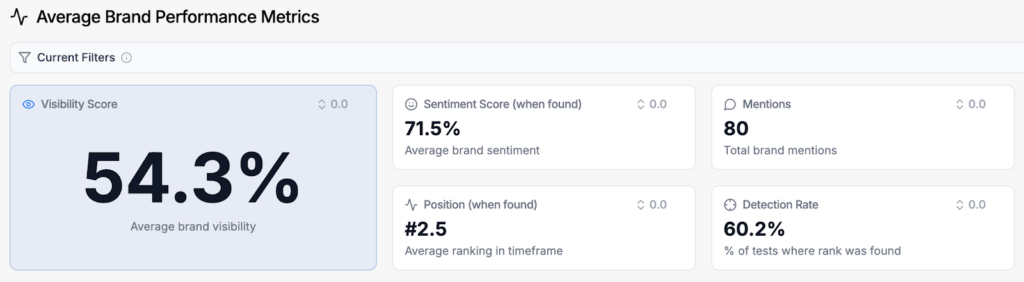

- Report on general AI visibility (mention rate, share of voice, citation rate, sentiment, etc.).

- Segment AI visibility reports. (E.g., how does Highspot show up when people ask for alternatives to competitors? How do they show up in prompts about their product category?)

- Compare Highspot’s visibility to that of direct competitors.

- Find opportunities for increasing visibility.

This information didn’t necessarily have to come out of the box from an AI visibility tool. We’re used to exporting data from marketing tools, applying layers of manual insight, and then creating our own reports for clients. We didn’t expect to pull screenshots from these tools into our slide decks—we just wanted to know how difficult (and/or possible) it would be to find meaningful, useful information about how a brand shows up in AI channels using these tools.

Scoring AI visibility tools

The test project amounted to a list of 20 tasks we attempted with each of the AI visibility tools. Examples of these tasks include:

- Define search parameters

- Label and segment prompt data

- Generate head-to-head competitor sentiment comparison

- Investigate citation reports for content opportunities

We performed these tasks (when possible) across the tools, and gave each tool a grade from zero to five.

Each task had several factors that affected scoring. For example, a tool’s score in “Define competitors” included its options for manually specifying competitor information (could you add specific branded terminology and variants, or were you restricted to a single name and domain per competitor?), the helpfulness of tool suggestions, and the ability users had to manually override the tool’s suggestions (e.g., blacklisting competitors, controlling the quantity of competitors displayed, etc.).

While the scoring factors differed from task to task, grades of zero to five on each task had the same meanings:

| Grade | Grade description |
| --- | --- |
| 5 | The tool performs well on all associated scoring factors, and the task can be done in-tool (without exports). We would use this tool and recommend it to clients for this task. |
| 4 | The tool gets the job done on the most important associated scoring factors, but the data requires manual work, validation, and/or interpretation in order for the tool to contribute to the project. |
| 3 | The tool is technically a passable candidate for the task. However, it underperforms on some scoring factors. We would neither use this tool nor recommend it to clients for this task. |
| 2 | The tool’s coverage and/or execution of scoring factors is too weak to be considered a viable candidate for the job. |
| 1 | The tool signals that it is capable of performing the task, but is frustratingly deficient. |
| 0 | The tool cannot be used for this task. |

Of course, not all tasks are of equal importance. We assigned each task a weight percentage, so the more important the task was, the more a tool’s performance on it would contribute to the final score.

(For us, importance came down to how vital performing a certain task was to making meaningful contributions to a marketing strategy, as well as inputs from clients and other marketing professionals. We’ll dig into those high-importance areas in the next section.)

Perfect performance on every task would result in a final score of 5. The highest score was 3.6. Some of these tools perform handsomely in a few categories, but none of them felt like across-the-board winners.
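The arithmetic behind a weighted score like this can be sketched in a few lines of Python. This is a simplified illustration, not our actual spreadsheet: it rolls the 20 tasks up into category groups, uses the 40%, 26%, and 14% category weights cited in this report, and lumps everything else into an assumed 20% "other tasks" bucket. The example grades are invented.

```python
# Category weights: the first three come from this report; "other_tasks"
# is an assumed bucket for the remaining 20% of the weighting.
WEIGHTS = {
    "segmentation": 0.40,
    "parameter_definition": 0.26,
    "competitor_comparison": 0.14,
    "other_tasks": 0.20,
}

def final_score(grades, weights=WEIGHTS):
    """Weighted average of 0-5 grades; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    for name, grade in grades.items():
        if not 0 <= grade <= 5:
            raise ValueError(f"grade for {name} must be between 0 and 5")
    return sum(weights[name] * grades[name] for name in weights)

# Invented grades for a hypothetical tool.
example_grades = {
    "segmentation": 4.0,
    "parameter_definition": 4.5,
    "competitor_comparison": 2.0,
    "other_tasks": 3.0,
}
example_score = final_score(example_grades)
perfect_score = final_score({name: 5 for name in WEIGHTS})

print(f"Example tool: {example_score:.2f}")
print(f"Perfect tool: {perfect_score:.2f}")
```

Because segmentation carries the most weight, a one-point change there moves the final score by 0.4, while the same change in competitor comparison moves it by only 0.14.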

Critical AI visibility tool capabilities to look for

You can probably tell by now that the current state of AI visibility tools isn’t all that impressive when it comes to their ability to inform strategists in a meaningful way.

However, you can’t forgo AI visibility reporting for much longer. In fact, if you’re reading this, odds are good you’ve been told you have to start reporting on GEO activities.

So if that’s your situation, we recommend focusing your buying criteria on a tool’s capabilities in the following areas.

1. Segmentation architecture

There is nothing more important to look for in an AI visibility tool. Full stop.

No marketing team should be simply reporting on general AI visibility. People use AI search channels to answer different kinds of questions, and segmenting your visibility data is key to getting an actual understanding of how your brand is performing or finding any actionable insights.

All of these tools (in their current states) are rather weak when it comes to data interpretation, and most lack capabilities to filter and save useful reports in-tool. These tools are not operating at the workbench level yet: most users will end up exporting data and then working with it in spreadsheets.

Therefore, in-tool data segmentation will directly affect how much manual heavy lifting you’ll be doing in those spreadsheets. The more you can tag and segment your prompt and response data in the tool itself, the less headache you’ll have when dealing with reporting.

You need a tool that lets you tag prompt/response data.

Some tools are much better at this than others. At the very least, a tool should allow you to quickly and intuitively apply custom tags to the prompts you’re running reports on. Stronger tools will give you the option of bucketing prompts by topics and personas. The best performer in this category (Scrunch) uses AI to recognize different prompt attributes (intent, funnel stage, branded/unbranded, etc.).
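If you end up doing this segmentation yourself in an export, the underlying idea is simple: attach a set of tags to every prompt, then compute metrics per tag. Here is a minimal Python sketch. The record layout, tag names, and results are assumptions for illustration and don't reflect any tool's export format.

```python
from collections import defaultdict

def mention_rate_by_tag(records):
    """Return {tag: fraction of prompts with that tag whose response mentioned the brand}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for record in records:
        for tag in record["tags"]:
            totals[tag] += 1
            hits[tag] += int(record["mentioned"])
    return {tag: hits[tag] / totals[tag] for tag in totals}

# Invented prompt records; a prompt can carry several tags at once.
records = [
    {"prompt": "best sales enablement tools",
     "tags": {"unbranded", "category"}, "mentioned": False},
    {"prompt": "alternatives to AcmeEnable",
     "tags": {"unbranded", "alternatives"}, "mentioned": True},
    {"prompt": "pros and cons of Highspot",
     "tags": {"branded"}, "mentioned": True},
]

rates = mention_rate_by_tag(records)
for tag in sorted(rates):
    print(f"{tag}: {rates[tag]:.0%}")
```

The point of letting one prompt carry multiple tags is that the same data can answer several questions (branded vs. unbranded, category vs. alternatives) without re-running anything.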

All in all, tasks related to segmentation architecture accounted for 40% of a tool’s overall grade. Here’s how tools stacked up on segmentation tasks:

AI visibility tool scores: Segmentation architecture capabilities

| Tool | Grade (weighted) | Notes |
| --- | --- | --- |
| Scrunch | 4.1 | Automatically identifies branded prompts and funnel stage, allows you to filter by various attribute types for in-tool reports |
| Profound | 4.0 | Drill-down reporting, allows you to label and segment domains |
| Peec | 3.6 | Clunky tagging mechanism, but technically gets the job done |
| Otterly | 2.5 | Limited to single-tag–only reports |
| Rankscale | 2.2 | Passable tag-based reporting, unintuitive tag setup and management UX |
| Semrush | 2.0 | Includes tagging and grouping capabilities, but only in their Guru plan |
| Ahrefs Brand Radar | 0 | Sadly, this isn’t really what Ahrefs is set up to do. |

2. Defining parameters for AI visibility monitoring

As of now, no tool can give you a reliable universal view of real-world prompts and responses, let alone their associated volumes. This means an AI visibility tool’s utility comes down to your ability to control what you’re reporting on. The more control you have over setting these parameters, the more you can rely on the tool’s reports on mention rate, citation rate, share of voice, etc.

The things you will want to define include:

- A target entity (usually your own brand, product name(s), domain(s), etc.)

- Your competitors

- The prompts you monitor (and suppressing unhelpful noise)

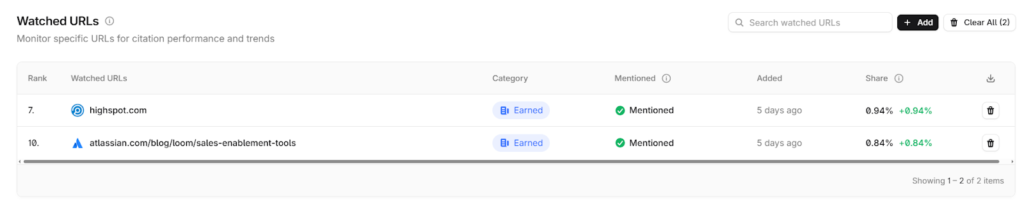

The very best performer in this area (Profound) does something especially neat: you can create a watchlist of certain URLs whose AI citations you want to track over time. It’s surprising that nobody else is doing this, because this is one of the key ways that we would want to use a tool like this. For example, don’t you think I’d like to know how often Perplexity cites this report when it responds to the prompt, “What’s the best tool for monitoring how my brand shows up in ChatGPT”? You bet I would.

That’s not to say you couldn’t get to that data through other tools: it would just take a lot of work. You’d likely end up exporting citation reports and searching them for the specific URL every time you wanted to check. But as it stands, only Profound lets you create a watchlist of URLs in-tool. And their watchlist functionality is quite barebones. This is the kind of functionality that needs to be built out further before AI visibility tools can offer real value as dashboards.
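For a sense of what that manual workaround looks like, here is a rough Python sketch that counts how often watchlisted URLs appear in an exported citation report. The CSV layout (a `cited_url` column) and the sample rows are assumptions. Real exports will differ from tool to tool, so treat the column names as placeholders.

```python
import csv
import io
from urllib.parse import urlsplit

def normalize(url):
    """Reduce a URL to host + path so trivial variants still match.

    Drops the scheme, a leading "www.", the query string, and any trailing slash.
    """
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path.rstrip("/")

def count_watchlist_citations(csv_file, watchlist):
    """Count rows in a citation export whose cited URL is on the watchlist."""
    targets = {normalize(url): url for url in watchlist}
    counts = {url: 0 for url in watchlist}
    for row in csv.DictReader(csv_file):
        key = normalize(row["cited_url"])
        if key in targets:
            counts[targets[key]] += 1
    return counts

# Invented export contents; in practice this would be a file opened with open().
export = io.StringIO(
    "prompt,platform,cited_url\n"
    "best ai visibility tool,perplexity,https://www.example.com/ai-visibility-tools/\n"
    "best ai visibility tool,chatgpt,https://example.com/ai-visibility-tools\n"
    "track brand in chatgpt,perplexity,https://other.example.org/post\n"
)
watchlist = ["https://example.com/ai-visibility-tools"]
citation_counts = count_watchlist_citations(export, watchlist)
print(citation_counts)
```

You would need to rerun something like this on a fresh export every time you wanted an update, which is exactly the chore an in-tool watchlist removes.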

Parameter definition tasks account for 26% of a tool’s final grade. Here’s how they all stack up in this area.

AI visibility tool scores: Parameter definition capabilities

| Tool | Grade (weighted) | Notes |
| --- | --- | --- |
| Profound | 4.7 | Allows for specific URL citation tracking |
| Scrunch | 4.4 | Allows you to attach branded terms, name variants, and multiple domains to a single entity |
| Otterly | 4.4 | Allows you to attach branded terms, name variants, and multiple domains to a single entity |
| Peec | 4.1 | Doesn’t attach branded terminology and variants to entities, but generally gets the job done |
| Rankscale | 4.1 | Lots of blacklist/whitelist control when defining competitors |
| Semrush | 3.5 | No branded terminology or name variant capabilities |
| Ahrefs Brand Radar | 2.8 | Very strong name, domain, terminology, and variant tie-ins, but very weak on prompt control. |

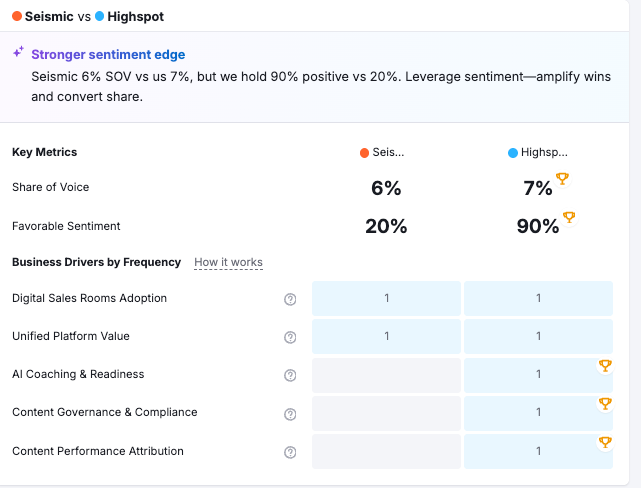

3. Competitor comparison reporting

One of the key follow-up questions any marketing leader should ask when presented with AI visibility reports is, “Well, how are our competitors doing?”

That means any tool you use should be able to generate the data you need to understand (and show leadership) how your brand’s AI visibility compares to that of your competitors. When we started this project, we assumed tool providers would account for this.

We were wrong.

There’s a big hole in the landscape when it comes to head-to-head, us-vs-them AI visibility comparison, and right now, the only player doing close to a decent job at this is Rankscale.

Still, even if tools aren’t handling this all that impressively today, comparing your AI visibility against competitors is important. The tasks associated with this account for 14% of a tool’s final score.

The pros and cons of each AI visibility tool

You’ve seen the general rankings already, but now let’s take a closer look at how these tools stack up against each other. For each of these tools, I’ve included links to their pricing pages, information on free trials, and any noteworthy accolades that came up as we were using them.

And again, please keep three things in mind when looking at these scores:

- This is a new product category, so all of these tools have improvements on their roadmaps.

- The most useful information about AI visibility (real-world prompt and volume data) is unavailable to these tools.

- These tools cost a lot to run, and those costs are passed on to the customers—several of these are often more expensive than the tools content marketing strategists rely on for truly useful data.

We’ve included the price point at which you’ll likely need to use the tool if you’re doing serious marketing strategy. Most of these tools have a low starter plan, but that’s exactly what it should be considered: a starter. Most marketers will outgrow the lowest-tier plan very quickly.

1. Profound

Final score: 3.6

Pricing: $399+/mo (free 7-day trial)

Profound is the most well-rounded tool we reviewed. You can build sophisticated segmentation architecture, label and segment domains, and define your prompt parameters with relative control. This gives you a lot of options when it comes to slicing your data.

Profound’s ability to recognize sentiment in AI response snippets and group those snippets by theme is also especially useful.

Big win: watch specific URLs for citations

Profound allows you to track specific URLs for citations, and it’s the only tool we reviewed that does this.

In terms of real-world usefulness, this is huge. When you publish a piece of content designed to gain visibility for specific types of search, Profound can track its performance against the prompts you choose.

Not good: AI nonsense pollutes sentiment analysis

Profound has a pesky problem when it comes to sentiment analysis. The tool generates sentiment reports based on a wide range of nonsense prompts that the tool searches on your behalf—not the sample prompts you originally selected.

For example, here’s Profound telling us about how ChatGPT cites “high cost concerns” as a source of negative sentiment, and it actually highlights the text that it’s pulling that sentiment from. However, it’s in response to the prompt, “Evaluate the Sales Enablement company Highspot on Highspot.”

What?

This means that although their sentiment response analysis tool is one of the most advanced in the pack, most of the sentiment analysis is based on responses to prompts that no human being would ever use. It’s very well-packaged noise, but it’s still noise.

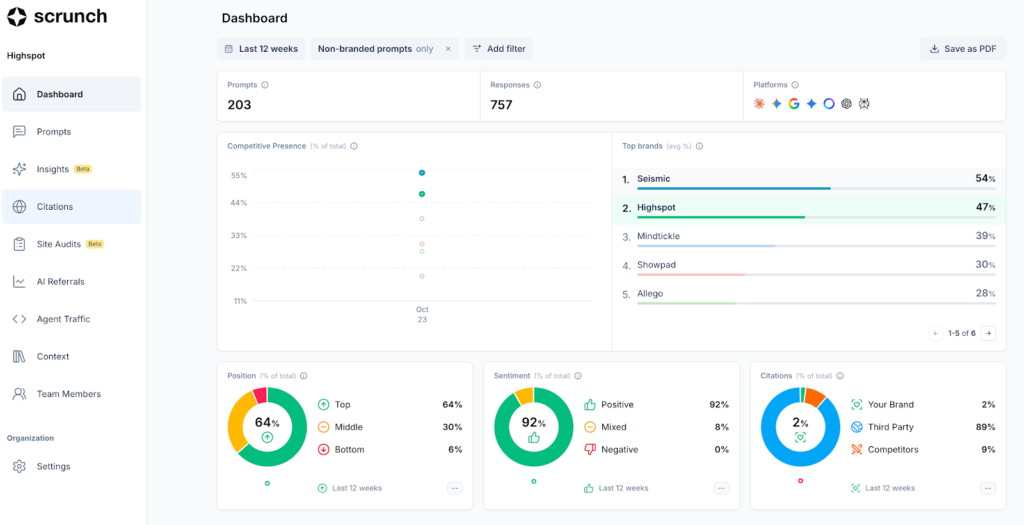

2. Scrunch

Final score: 3.4

Pricing: $250+/mo (free 7-day trial)

Scrunch seems attuned to the real needs of SEO and content strategists. Specifically, they have the most helpful tools for segmentation architecture and filtered reporting that we saw during this project—and their parameter definition capabilities are strong relative to the rest of the pack too.

Best segmentation capabilities

Scrunch applies an extremely helpful layer of AI assistance to segmentation data. You don’t have to manually tag prompts as “branded,” or by marketing funnel stage: Scrunch does this for you automatically.

Scrunch also lets you use this data to generate filtered reports directly within the tool, which means if you have a strong tagging system, you can generate several screen-grabable reports quickly.

Strong entity control for multi-domain brands

Scrunch also lets you tie multiple web domains to a single entity. This means if your brand operates multiple websites, you can track citations to all of them as part of your unified target brand.

Most teams won’t need this, but as someone who used to work on a marketing team responsible for more than 10 different websites, I appreciated seeing this.

Weak drill-down analytics

For all their filtering options, Scrunch’s reports are rather shallow. There is little opportunity to drill down into the charts they show you, and sentiment data is only available at the individual prompt level.

3. Peec

Final score: 3.2

Pricing: €199+/mo, or about $230 USD (free 7-day trial)

Peec is the final player who managed to score above 3 in this list. The tool isn’t especially impressive compared to Profound and Scrunch, but it’s a better overall option than the lower-scoring four players.

Unlimited seats, unlimited regions

While it’s not the strongest tool, it’s very affordable. The higher-ranking tools charge extra for more seats and countries, but Peec gives you unlimited seats and reports on activity in unlimited countries, even at the least expensive level.

Less-than-helpful automatic labeling

Peec tries to help you by automatically labeling domains and content types. Unfortunately, Peec doesn’t always do this in the most helpful way.

URL and domain typologies are treated as mutually exclusive categories, which means your data is almost always incompletely labelled. For example, a blog post titled “The 10 Best Alternatives to Highspot” will be labeled “Article,” “Listicle,” or “Alternative,” but not all three. There’s no way to correct this within the tool, so you’ll be stuck doing that in an exported spreadsheet.

They’re clearly trying to make it easier to identify what kind of websites and what types of content get cited in responses, but we didn’t have to look very closely to realize that any reports would have to be exported and manually relabeled in order to be useful.

4. Rankscale

Final score: 2.9

Pricing: $99+/mo (7-day free trial available on request)

With all the buzz around AI visibility in the marketing world, I expected this list to be full of venture-backed startups and add-on tools from incumbent marketing SaaS companies. I did NOT expect the #4 spot to be occupied by … [checks LinkedIn] … just two guys?!

OK. Well done, Mathias and Patrick.

Using Rankscale gives you the sense that they have both a vision for the product and a desire to hear user input. They scored middle of the pack when we reviewed them, but don’t be surprised if they’re acquired soon, because …

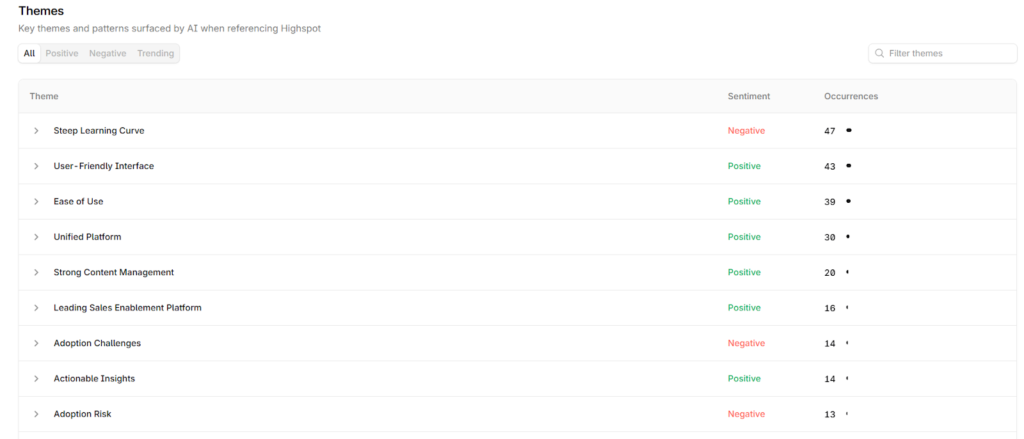

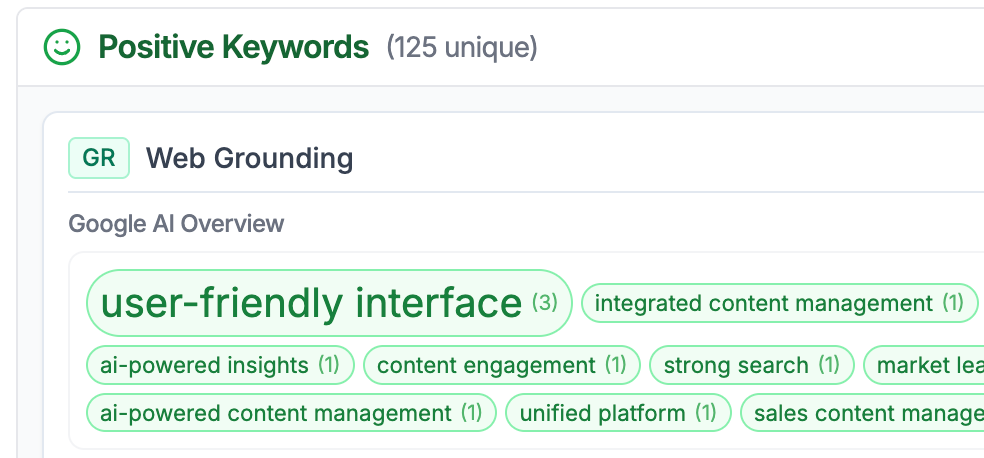

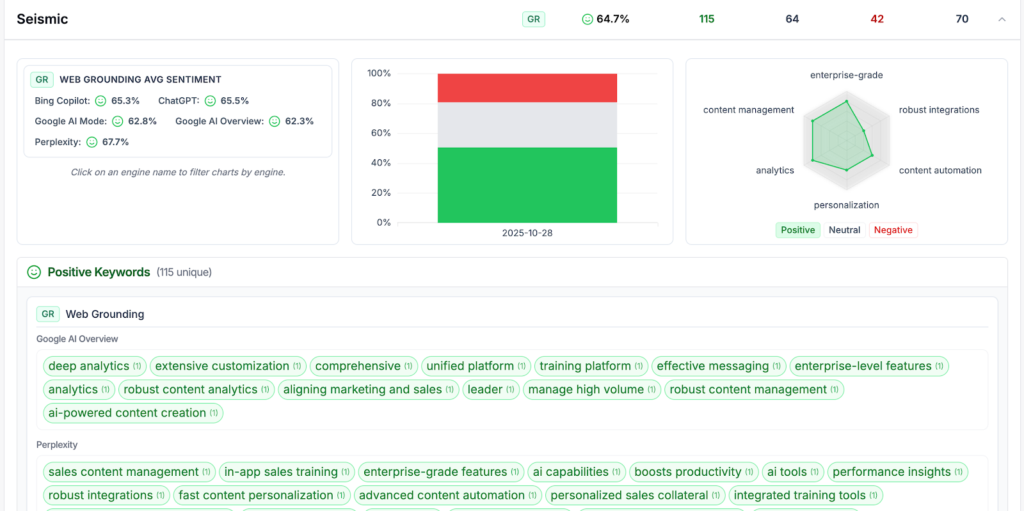

Rankscale is the best LLM sentiment analysis tool

Rankscale recognizes sentiment elements in response snippets, groups those snippets by theme, and maps these sentiment elements to specific text snippets in AI responses.

Unlike Profound, it gives you this sentiment data from the actual prompts you’re tracking, not AI-generated nonsense prompts.

Unlike anyone, Rankscale provides radar charts, giving you an easy-to-understand visual snapshot of how AI channels present your brand’s pros and cons to searchers.

(This display shows the spread of positive sentiment themes, but you can toggle it to show neutral and negative radar charts as well.)

You can run these reports on your competitors, too!

In case you can’t tell, I’m impressed.

Weak and clunky on segmentation

Rankscale allows you to group prompts by topics, but there’s no way to set up a prompt-tagging architecture. There is no simple, intuitive way to filter and bulk-label prompts with topics, either. If you’re using Rankscale to generate anything more granular than a brand-level visibility report, you’re in for a trial.

Update: I’m told that in the short time between when we tested Rankscale and when I published this piece, Rankscale has added some tagging functionality! Proof that these tools are growing up fast—and that the Rankscale team means business. 😉

5. Otterly

Final score: 2.8

Pricing: $189+/mo (14-day free trial)

Otterly is affordable (unlimited seats, unlimited reports) and comes with some decent capabilities, but you’re likely going to spend a lot of time manually working around its limited reporting options.

Very strong parameter control

Otterly’s parameter definition capabilities are Scrunch-level. You can add multiple domains, name variants, and branded terms to the entities you’re tracking in Otterly, which is especially helpful if your product names differ from your parent brand’s name (or if your brand operates across multiple domains).

Clunky segmented reporting

If you want to run reports on prompts based on multiple tags, you’re out of luck.

Otterly currently only lets you run single-tag reports: you can filter your dashboard by one tag at a time. That means you’ll be building most of your reports by exporting multiple spreadsheets and pulling them together.

Furthermore, sentiment analysis is only found at the individual prompt level, and Otterly’s automatic labeling of domains in citations suffers from similar problems to Peec’s.
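For the multi-tag workaround, pulling single-tag exports together mostly comes down to intersecting them. Here's a rough sketch, assuming each export is a CSV with a `prompt` column. That column name, the sample rows, and both helper functions are made up for illustration, and a real export may be structured differently.

```python
import csv
import io


def prompts_in_export(csv_text: str, column: str = "prompt") -> set[str]:
    """Collect the prompt strings from one single-tag export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[column].strip() for row in reader}


def prompts_with_all_tags(exports: dict[str, str]) -> set[str]:
    """Intersect single-tag exports to find prompts that carry every tag."""
    prompt_sets = [prompts_in_export(text) for text in exports.values()]
    return set.intersection(*prompt_sets) if prompt_sets else set()


# Made-up sample data standing in for two exported files, keyed by tag.
exports = {
    "comparison": (
        "prompt,mentions\n"
        "Compare Bigtincan to Highspot,3\n"
        "Best sales enablement software,5\n"
    ),
    "enterprise": (
        "prompt,mentions\n"
        "Best sales enablement software,2\n"
        "Sales enablement for startups,1\n"
    ),
}

both = prompts_with_all_tags(exports)
print(both)
```

In practice you'd read each file from disk rather than from inline strings, and you'd still need to join the metrics back onto the surviving prompts. That's the manual work you're signing up for.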

6. Semrush AIO

Final score: 2.2

Pricing: $99+/mo (but you’ll pay much more for a decent sample prompt set)

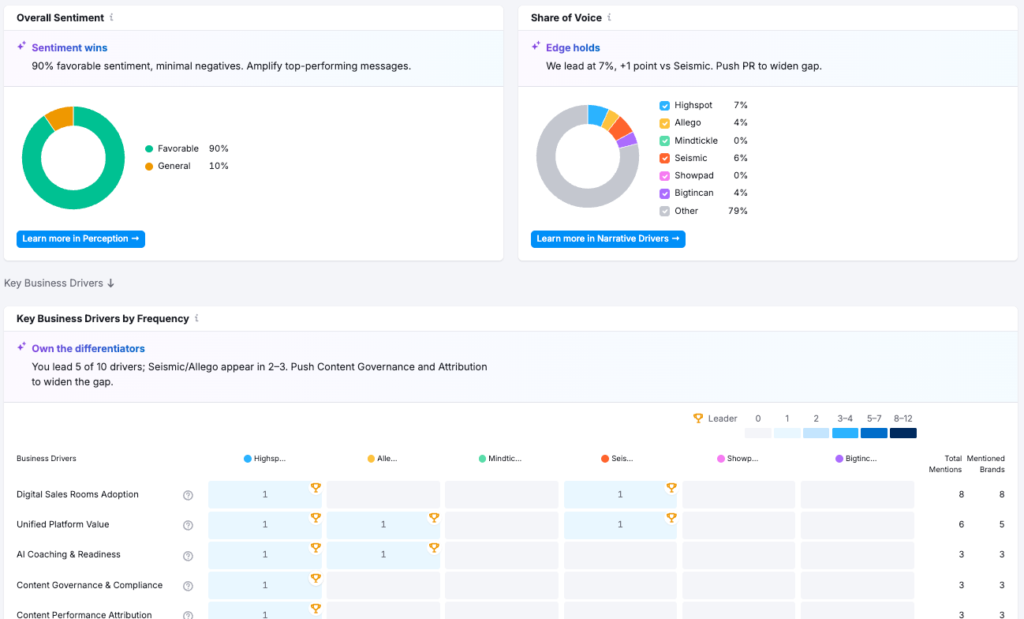

When I first opened Semrush’s AI visibility tool, I was impressed. Lots of visual reports. True head-to-head competitor comparisons. Decision drivers identified in responses, and competitors ranked based on how often they were associated with them!

It seemed too good to be true.

(Narrator: “It was entirely too good to be true.”)

Pretty reports, low information

I’ll hand it to Semrush: they know what kind of reports marketers want to be able to screenshot and drop into slide decks.

Semrush gives you plenty of eye-catching reports, but few ways to interrogate them and see where the numbers come from.

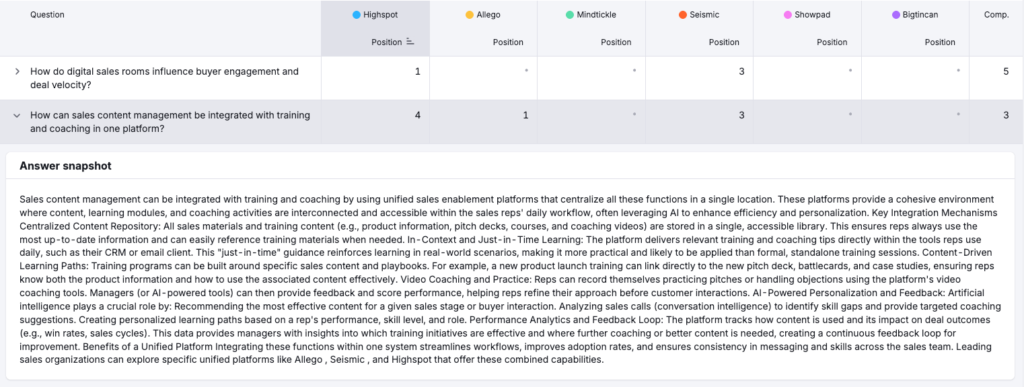

For example, this breakdown of “business drivers” that their AI has identified might look cool at first. But nothing in this is clickable (except the little ❓ icons, which show AI-generated definitions of those drivers).

You might think you could find more details in the “Narrative Drivers” tab, but that information isn’t there either.

What you will find instead is an entirely unformatted “snapshot” of AI responses to questions—with no information on the platform these responses are coming from.

Low parameter control, lots of noise

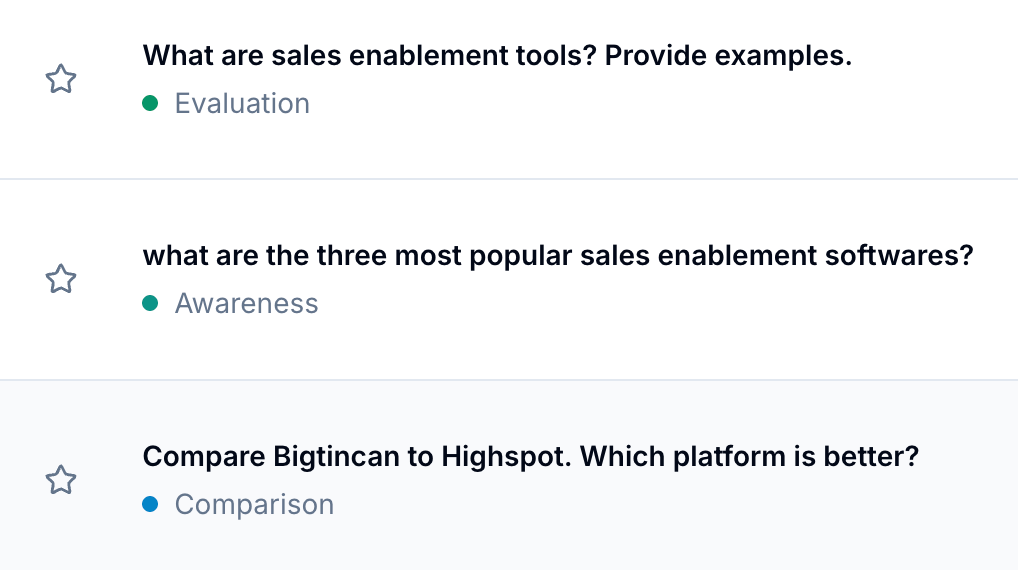

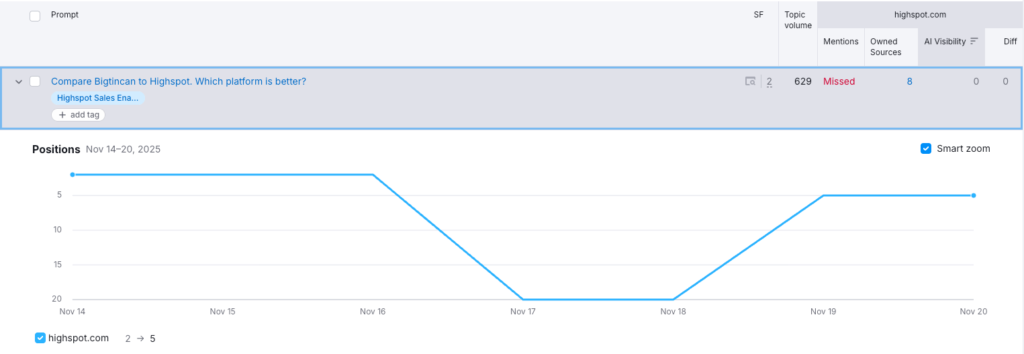

While you can set up specific prompts to track, credits for using these prompts burn up fast—and the data you get on them is suspect at best. For example, in our project we told Semrush to track the specific prompt, “Compare Bigtincan to Highspot. Which platform is better?”

Semrush claims ChatGPT doesn’t even mention Highspot in the response. That’s ridiculous, and completely inconsistent with our experience running that prompt on the other tools.

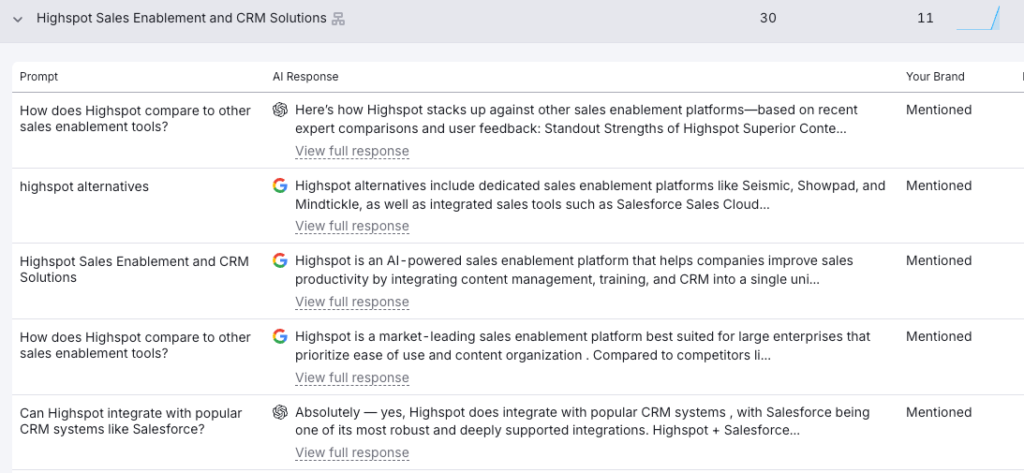

When it comes to actually monitoring widescale visibility, you’re at the mercy of whatever prompts Semrush chooses. Semrush populates your reports with response data from prompts they deem relevant to your domain inputs. Some of these prompts make sense to include in these reports:

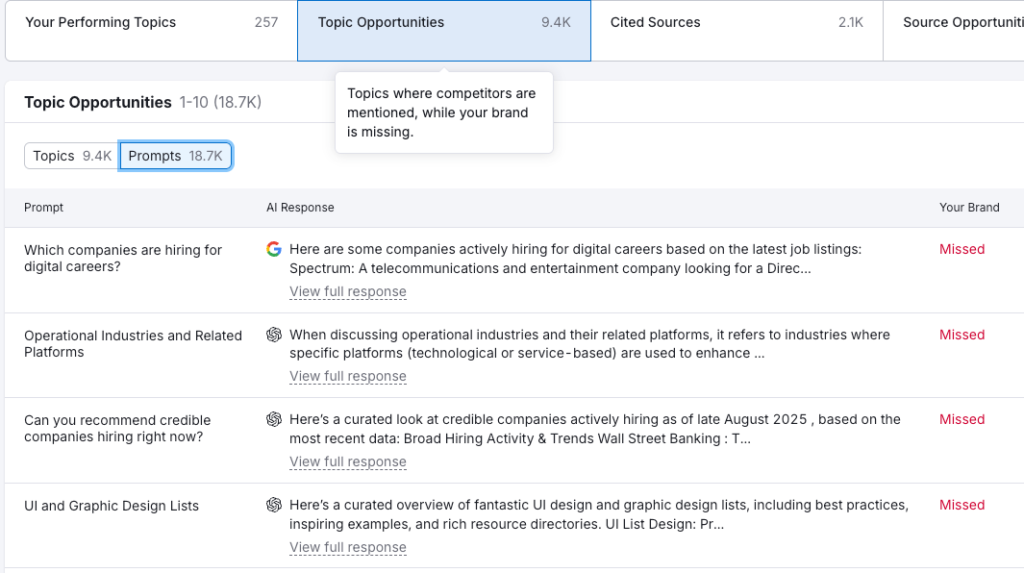

Unfortunately, you also get a lot of noise—especially when it comes to the “opportunities” Semrush pulls in for you:

This might be a workable problem if you could export this data from Semrush—but that’s not available yet.

An unending stream of AI gobbledygook

Semrush has gone all-in on the notion that report numbers mean nothing without actionable insights. Which means every report comes with some AI-generated suggestions for improvement.

And they’re … interesting.

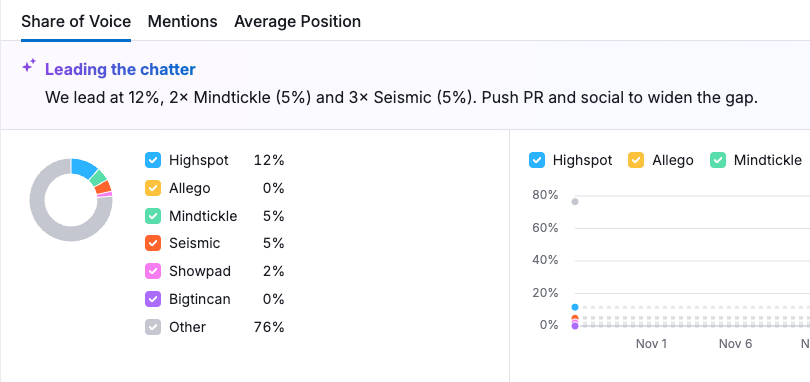

For example, did you know that 2 x 5 = 12? And also 3 x 5 = 12? That’s the kind of insight (and math) you’ll get from Semrush AI. I’m not joking. That’s real. Look.

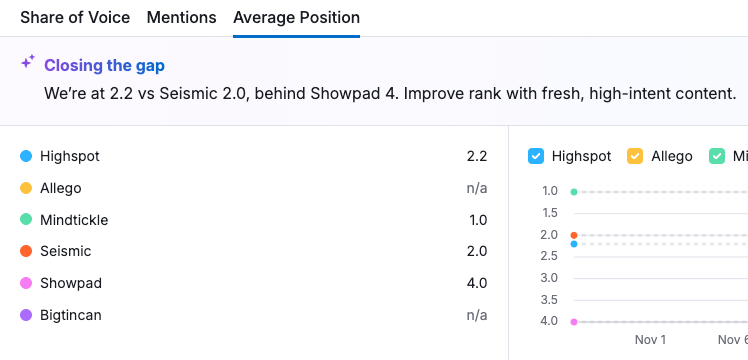

Or how about this cute example of Semrush AI not even understanding its own ranking system? Here Semrush says that both Highspot and Seismic are falling behind a competitor that they both outrank, when Mindtickle is actually the competitor they should be paying attention to:

Remember our golem analogy from earlier? Semrush is like an overeager golem that entertains itself by doing pointless, outright goofy tasks. However, this golem has no idea what it’s doing.

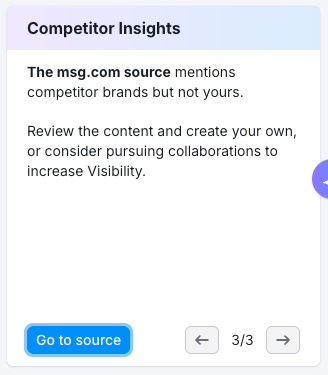

The problem goes beyond dumb slip-ups. Semrush will prioritize recommendations in the dashboard like this one:

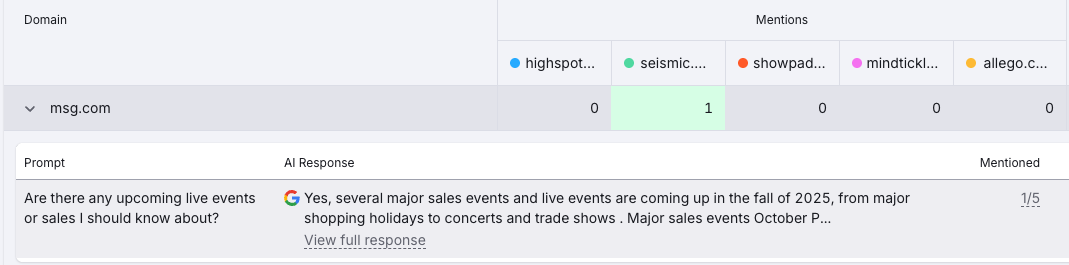

On the surface, this might look like a problem. A big domain is talking about our competitor, but not us! But if you look at the singular prompt that caused this “insight,” you can see that it’s an irrelevant prompt to begin with.

This nonsense isn’t limited to recommendations, either. The happy Semrush golem actively sprinkles this throughout your prompt and response data, too. Look at the topics Semrush assigned to these prompts.

If you use Semrush for monitoring your AI visibility, you’re going to spend a lot of time de-Semrushing your reports.

7. Ahrefs Brand Radar

Final score: 1.1

Pricing: $199/mo per platform

This was the biggest surprise for us, folks. For a decade, I’ve considered Ahrefs the most useful SEO research tool. But based on our experience with the tool on this project, Brand Radar (as it is today) is not an AI visibility monitoring tool we’d use for B2B SaaS marketing.

Their vision is one I completely agree with: measuring your brand’s visibility against synthetic prompts gives you, at best, a narrow, fuzzy, and biased picture of your visibility. Ahrefs is instead attempting to give marketers something better: data based on real prompts and real responses.

They have a database of 110 billion keywords and associated SERPs, which they mine for “People Also Ask” boxes. They then enter those questions into AI search platforms and track the responses. They have a very sophisticated querying engine for filtering reports. They keep the records of searches and responses month over month. They’ve got a lot going for them.

However, we found Brand Radar generally unhelpful.

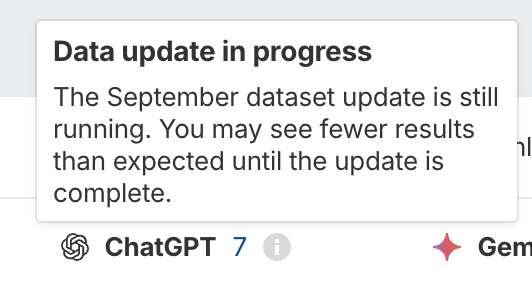

First of all, the data was lagging. When we ran our project in late October and early November, Brand Radar still hadn’t completed their update of September’s ChatGPT dataset.

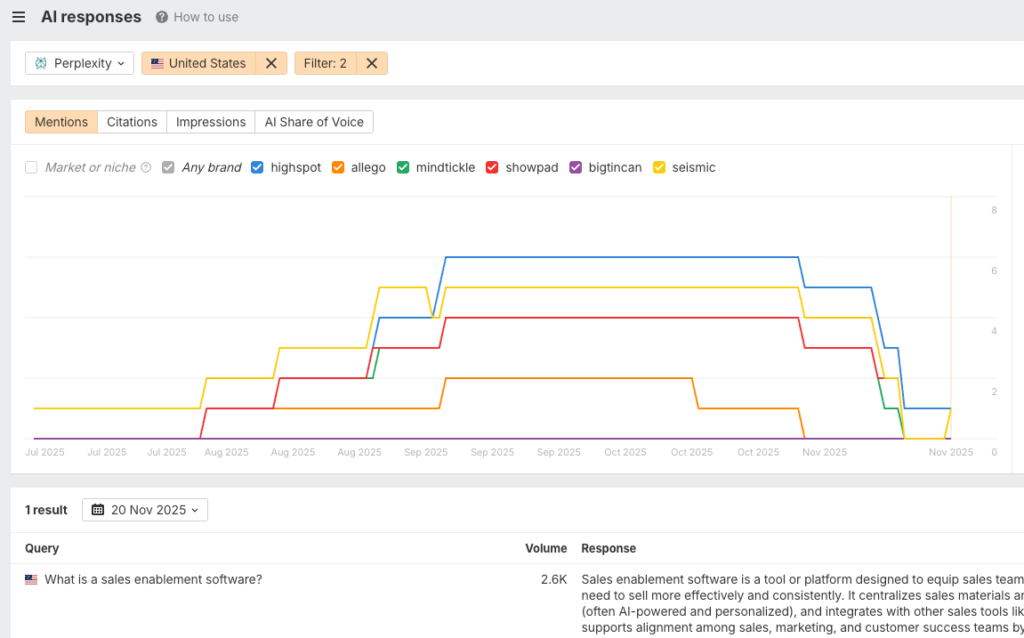

Second, you’re at the mercy of querying Ahrefs’ keyword database. This means that when it comes to Perplexity responses under the topic “sales enablement software,” I only get one response:

(The topic “sales enablement tools” has a whopping three Perplexity responses. It’s the largest topic Ahrefs found in Perplexity.)

In fact, once we look beyond Google’s AI channels, mention data seems especially low.

Conceivably, you could apply complex filtering logic to get a very refined set of search parameters. But if you’re a B2B SaaS marketer trying to learn how people in the enterprise discover your brand and competitors, Ahrefs likely won’t have enough real-world search volume to work with.

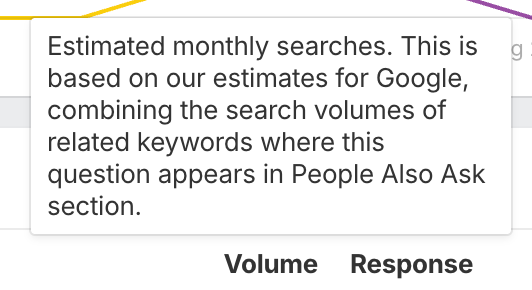

Speaking of search volume: Ahrefs attempts to estimate the monthly searches a query gets for you—but these numbers seem spurious. For example, when you look at ChatGPT response data in Ahrefs, Brand Radar gives you an estimated monthly search volume.

But they get this number by:

- Entering questions from Google’s People Also Ask SERP feature into ChatGPT as the prompt

- Looking at all the Google keywords that trigger that PAA question in their SERPs

- Adding up the search volumes of those keywords
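To make the arithmetic concrete, here's a small sketch of those three steps as we understand them. Every keyword, volume, and name below is invented for illustration. This is our reading of the method, not Ahrefs' actual code or data.

```python
# Hypothetical Google keyword volumes; every number here is made up.
keyword_volumes = {
    "sales enablement software": 2400,
    "best sales enablement tools": 900,
    "sales enablement platform": 1300,
    "crm software": 40000,
}

# Hypothetical mapping from a PAA question to the Google keywords whose SERPs show it.
paa_triggers = {
    "What is the best sales enablement software?": [
        "sales enablement software",
        "best sales enablement tools",
        "sales enablement platform",
    ],
}


def estimated_prompt_volume(question: str) -> int:
    """Sum the Google volumes of every keyword that triggers this PAA question."""
    return sum(keyword_volumes[kw] for kw in paa_triggers.get(question, []))


print(estimated_prompt_volume("What is the best sales enablement software?"))
```

Notice that nothing in this calculation ever touches ChatGPT usage. The resulting figure is built entirely from Google keyword data.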

I can imagine that the person at Ahrefs who came up with this means of calculating search volume got a lot of high fives. It’s clever. But adding up the search volumes of Google keywords that trigger a PAA question and then presenting that as the search volume for a completely different platform is … a choice.

What Ahrefs is doing is a valiant attempt, but we can’t confidently say that it’s set up to help you answer your CMO when they ask, “How are we doing in AI search?”

Final thoughts: you get out what you put in

You can’t treat any of these tools as push-button solutions. When it comes to getting value out of AI visibility tools, your marketing team is the biggest determining factor.

No matter which tool you choose, the value you get from an AEO tool will ultimately come down to the way you’ve decided to measure and track visibility. This means you’ll need to put a good deal of thought into the sample prompts you choose, and any leaders you’re reporting this to should also have an intimate knowledge of what is being measured.

“That sounds pretty involved.”

Yeah, it will be. Want some help?

The Overthink Group team and I can help you with this. We’ll work with your team to design a sample prompt set, tagging architecture, and reporting methodology to track your AI visibility. All you have to do is drop us a line.